Author – Neeraj Kumar, Cloud Engineer

Overview

A service mesh is a popular solution for managing communication between the individual microservices of a microservices application. It layers on top of your Kubernetes infrastructure and makes communication between services over the network safe and reliable. Think of a service mesh as a routing and tracking service for a package shipped in the mail: it keeps track of the routing rules and dynamically directs the traffic and package route to accelerate delivery and ensure receipt.

Capabilities

Service mesh allows you to separate the business logic of the application from observability, and network and security policies. It allows you to connect, secure, and monitor your microservices.

- Connect: Service Mesh enables services to discover and talk to each other. It enables intelligent routing to control the flow of traffic and API calls between services/endpoints. This also enables advanced deployment strategies such as blue/green, canary, or rolling upgrades.

- Secure: Service Mesh allows you to secure communication between services. It can enforce policies to allow or deny communication. For example, you can configure a policy to deny access to production services from a client service running in a development environment.

- Monitor: Service Mesh enables observability of your distributed microservices system. Service Mesh often integrates out-of-the-box monitoring and tracing tools (such as Prometheus and Jaeger in the case of Kubernetes) to allow you to discover and visualize dependencies between services, traffic flow, API latencies, and traces.
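To make the Secure capability more concrete, the sketch below models the example policy from the list above (deny calls from a development client to production services) as a small rule check. It is a conceptual illustration only: the `Rule` and `is_allowed` names, the environment labels, and the default-allow behavior are assumptions made for this example, not the policy API of any particular mesh.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Rule:
    """Hypothetical deny rule keyed on environment labels."""
    source_env: str
    destination_env: str


# Assumed policy: development clients may not call production services.
DENY_RULES = (Rule(source_env="development", destination_env="production"),)


def is_allowed(
    source_env: str,
    destination_env: str,
    deny_rules: Sequence[Rule] = DENY_RULES,
) -> bool:
    """Return False if any deny rule matches; otherwise allow (assumed default)."""
    return not any(
        rule.source_env == source_env and rule.destination_env == destination_env
        for rule in deny_rules
    )


if __name__ == "__main__":
    print(is_allowed("development", "production"))
    print(is_allowed("production", "production"))
```

In a real mesh the proxies enforce rules like this on every connection, so the application code never has to perform the check itself.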

Solutions

Istio

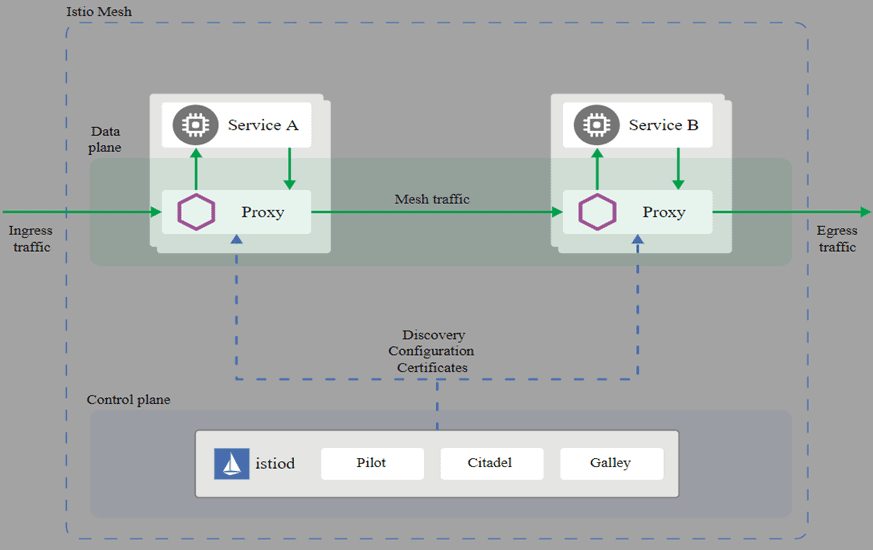

Istio is a full-featured, customizable, and extensible service mesh. It separates its data plane from its control plane by using sidecar proxies that cache configuration, so they do not need to go back to the control plane for every call. The control plane components run as pods in the same Kubernetes cluster, which improves resilience if a single pod in any part of the service mesh fails.

Why use Istio?

Istio makes it easy to create a network of deployed services with load balancing, service-to-service authentication, monitoring, and more, with few or no code changes in service code. You add Istio support to services by deploying a special sidecar proxy throughout your environment that intercepts all network communication between microservices, then configure and manage Istio using its control plane functionality, which includes:

- Automatic load balancing for HTTP, gRPC, WebSocket, and TCP traffic.

- Fine-grained control of traffic behavior with rich routing rules, retries, failovers, and fault injection.

- A pluggable policy layer and configuration API supporting access controls, rate limits, and quotas.

- Automatic metrics, logs, and traces for all traffic within a cluster, including cluster ingress and egress.

- Secure service-to-service communication in a cluster with strong identity-based authentication and authorization.

Features

Istio provides several key capabilities uniformly across a network of services:

Traffic management

Istio simplifies the configuration of service-level properties like circuit breakers, timeouts, and retries, and makes it easy to set up important tasks like A/B testing, canary rollouts, and staged rollouts with percentage-based traffic splits. With better visibility into your traffic and out-of-the-box failure recovery features, you can catch issues before they cause problems, making calls more reliable and your network more robust under varying conditions.
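A percentage-based traffic split can be pictured as a weighted random choice between service versions. The sketch below is a minimal illustration of that idea, not Istio's implementation; the `pick_version` function, the version names `v1` and `v2`, and the 90/10 weights are all assumptions chosen for the example.

```python
import random
from typing import Mapping


def pick_version(weights: Mapping[str, int], rng: random.Random) -> str:
    """Pick a destination version with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


if __name__ == "__main__":
    # Hypothetical canary rollout: roughly 10% of requests go to v2.
    split = {"v1": 90, "v2": 10}
    rng = random.Random(42)
    counts = {"v1": 0, "v2": 0}
    for _ in range(10_000):
        counts[pick_version(split, rng)] += 1
    print(counts)
```

Shifting a rollout forward then amounts to changing the weights, which a mesh lets you do in configuration rather than in application code.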

Security

Istio provides the underlying secure communication channel and manages authentication, authorization, and encryption of service communication at scale. With Istio, service communications are secured by default, letting you enforce policies consistently across diverse protocols and runtimes with few or no application changes. Istio is platform-independent, but the benefits are even greater when you use it together with Kubernetes (or infrastructure) network policies, including the ability to secure pod-to-pod or service-to-service communication at the network and application layers.
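To show what mutual authentication means at the TLS level, here is a small sketch using Python's standard `ssl` module. It builds a server-side context that refuses clients which do not present a certificate. This is only an illustration of the concept: in a mesh the sidecar proxy performs this handshake on the application's behalf and the control plane issues the certificates. The function name and the optional file path parameters are hypothetical.

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server-side TLS context that also requires a client certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Requiring a peer certificate is what makes the handshake mutual.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own identity (paths are placeholders supplied by the caller).
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The CA used to verify certificates presented by clients.
        ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

Called without arguments, the function returns a context with the policy set but no certificates loaded, which is enough to inspect the settings but not to serve traffic.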

Observability

Istio’s robust tracing, monitoring, and logging features give you deep insight into your service mesh deployment. Its monitoring features show how service performance affects things upstream and downstream, while its custom dashboards provide visibility into the performance of all your services, so you can detect and fix issues quickly and efficiently.

Platform Support

Istio is platform-independent and designed to run in a variety of environments, including those spanning Cloud, on-premise, Kubernetes, Mesos, and more. You can deploy Istio on Kubernetes. Istio currently supports:

- Service deployment on Kubernetes

- Services registered with Consul

- Services running on individual virtual machines

- Multi-cluster service mesh

Linkerd

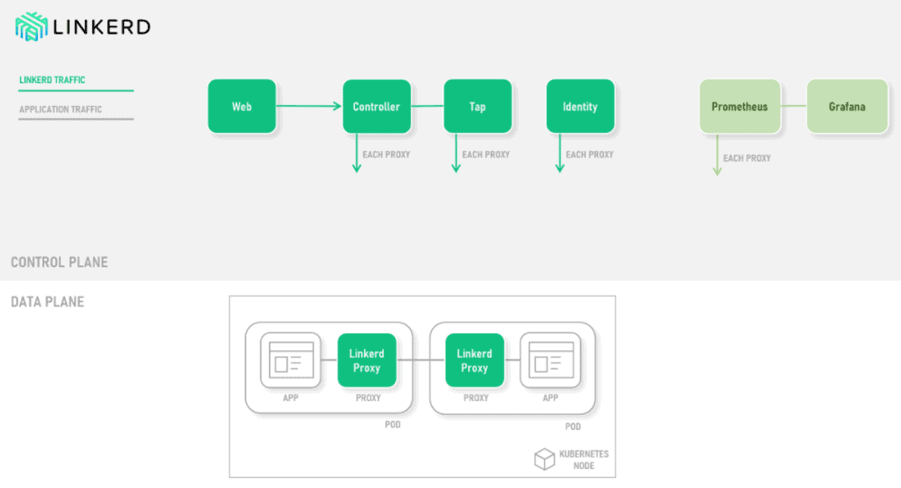

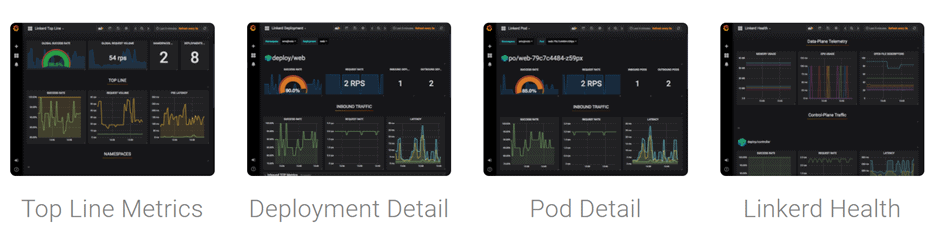

Linkerd is an easy-to-use and lightweight service mesh. It consists of a control plane and a data plane. The control plane is made up of the main controller component, a web component serving the user dashboard, and a metrics component consisting of a modified Prometheus and Grafana. These components control the proxy configurations across the service mesh and process relevant metrics. The data plane consists of the interconnected Linkerd proxies themselves, which are typically deployed as sidecars alongside each service container.

How does it Work?

Linkerd works by installing a set of lightweight, transparent proxies next to each service instance. These proxies automatically handle all traffic to and from the service. Because they’re transparent, these proxies act as highly instrumented out-of-process network stacks, sending telemetry to, and receiving control signals from, the control plane. This design allows Linkerd to measure and manipulate traffic to and from your service without introducing excessive latency.
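The idea of a transparent, instrumented proxy can be sketched as a wrapper that forwards every call to the service and records telemetry on the way. The code below is a conceptual illustration, not Linkerd's proxy; `SidecarProxy`, `Telemetry`, and `echo_service` are hypothetical names, and a real proxy works at the network level rather than by wrapping a Python function.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Telemetry:
    """Counters a proxy might report to a control plane (hypothetical shape)."""
    requests: int = 0
    failures: int = 0
    latencies_s: List[float] = field(default_factory=list)


class SidecarProxy:
    """Wraps a service handler and records telemetry for every call."""

    def __init__(self, handler: Callable[[str], str]) -> None:
        self._handler = handler
        self.telemetry = Telemetry()

    def handle(self, request: str) -> str:
        start = time.perf_counter()
        self.telemetry.requests += 1
        try:
            return self._handler(request)
        except Exception:
            self.telemetry.failures += 1
            raise
        finally:
            # Latency is recorded for successful and failed calls alike.
            self.telemetry.latencies_s.append(time.perf_counter() - start)


def echo_service(request: str) -> str:
    """Stand-in for application code; it knows nothing about the proxy."""
    return f"echo: {request}"


if __name__ == "__main__":
    proxy = SidecarProxy(echo_service)
    print(proxy.handle("hello"))
    print(proxy.telemetry.requests, proxy.telemetry.failures)
```

The service function stays unchanged, which mirrors how a mesh adds observability without modifying application code.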

The control plane is a set of services that run in a dedicated namespace. These services accomplish various things: aggregating telemetry data, providing a user-facing API, providing control data to the data plane proxies, and so on. Together, they drive the behavior of the data plane.

The data plane consists of the transparent proxies that run next to each service instance and handle all traffic to and from it, sending telemetry to the control plane and receiving control signals from it.

The control plane is made up of:

Controller – provides the API that drives the linkerd CLI and dashboard, and supplies configuration to the proxies.

Web – provides the Linkerd dashboard.

Tap – establishes connections to watch requests and responses in real time.

Identity – provides the certificates that Linkerd proxies use to implement mTLS on every connection between them.

Prometheus – collects and stores all the Linkerd metrics and provides the data used by the CLI, dashboard, and Grafana.

Grafana – renders and displays the metrics dashboards. You can reach these dashboards via links in the Linkerd dashboard itself.

Features

- Automatic mTLS and Proxy Injection – Linkerd automatically enables mutual Transport Layer Security (mTLS) for all communication between meshed applications and injects the data plane proxy into pods based on annotations.

- Traffic Management – Linkerd can perform service-specific retries and timeouts, and dynamically send a portion of traffic to different services.

- Multi-cluster communication

- TCP Proxying and Protocol Detection
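The retries and timeouts mentioned under Traffic Management can be sketched as a helper that re-issues a failed call until either an attempt limit or an overall time budget is exhausted. This is a simplified illustration, not Linkerd's retry logic: `call_with_retries` and its parameters are hypothetical, it retries on any exception, and it does not interrupt a call that is already running.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(
    call: Callable[[], T],
    max_attempts: int = 3,
    timeout_s: float = 1.0,
    backoff_s: float = 0.0,
) -> T:
    """Retry `call` on failure until attempts or the overall time budget run out."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    deadline = time.monotonic() + timeout_s
    last_error: Optional[Exception] = None
    attempts_made = 0
    for attempt in range(1, max_attempts + 1):
        attempts_made = attempt
        try:
            return call()
        except Exception as exc:  # a real proxy would retry only retryable failures
            last_error = exc
        # Stop if this was the last attempt or the next one would miss the deadline.
        if attempt == max_attempts or time.monotonic() + backoff_s >= deadline:
            break
        time.sleep(backoff_s)
    raise RuntimeError(f"gave up after {attempts_made} attempt(s)") from last_error
```

Because the mesh proxy applies this behavior per service, the limits can be tuned in configuration without touching the calling code.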

Platform support

- Easy to use with just the essential set of capability requirements

- Low latency, low overhead, with a focus on observability and simple traffic management

Consul

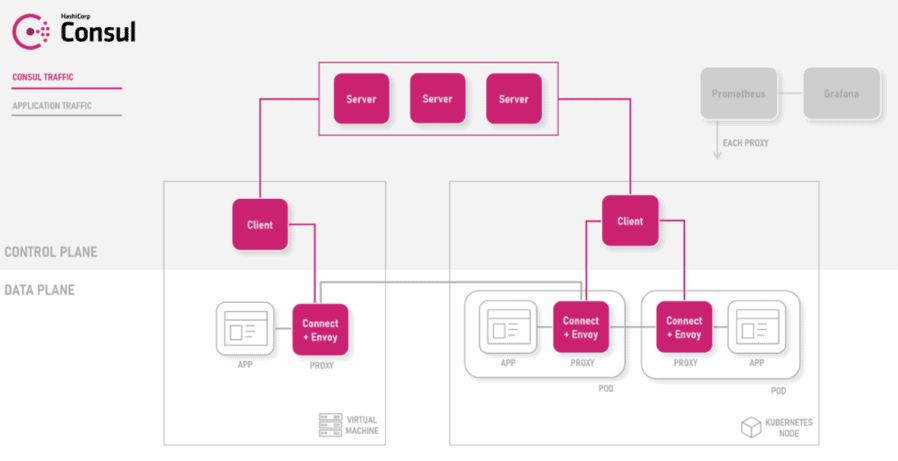

Consul is a full-featured service management framework, and the addition of Connect gives it secure service-to-service connectivity on top of its service discovery capabilities, which makes it a full service mesh. Consul Connect uses an agent installed on every node as a DaemonSet, which communicates with the Envoy sidecar proxies that handle routing and forwarding of traffic.

How does it work?

Consul provides a data plane that is composed of Envoy-based sidecars by default, with a pluggable proxy architecture. These intelligent proxies control all network traffic in and out of your meshed apps and workloads. Every node that provides services to Consul runs a Consul agent. Running an agent is not required for discovering other services. The agent is responsible for health checking the services on the node as well as the node itself. The agents talk to one or more Consul servers, which are where data is stored and replicated. Components of your infrastructure that need to discover other services or nodes can query any of the Consul servers or any of the Consul agents. The agents forward queries to the servers automatically.

The control plane manages the configuration and policy via the following components:

Server – A Consul Agent running in Server mode that maintains Consul cluster state.

Client – A Consul Agent running in lightweight Client mode. Each compute node must have a Client agent running. This client brokers configuration and policy between the workloads and the Consul configuration.

Features

Service Discovery – Clients of Consul can register a service, such as an API or a MySQL database, and other clients can use Consul to discover providers of a given service. Using either DNS or HTTP, applications can easily find the services they depend upon.

Health Checking – Consul clients can provide any number of health checks, either associated with a given service, or with the local node. This information can be used by an operator to monitor cluster health, and it is used by the service discovery components to route traffic away from unhealthy hosts.

Security – Consul can generate and distribute TLS certificates for services to establish mutual TLS connections. It provides an optional Access Control List system that can be used to control access to data and APIs. The Consul agent supports encrypting all of its network traffic.

Multi Datacenter – Consul supports multiple datacenters out of the box. This means users of Consul do not have to worry about building additional layers of abstraction to grow to multiple regions.
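The way service discovery and health checking work together can be sketched with a small in-memory registry: services register instances, health checks update their status, and lookups return only the healthy ones. This is a conceptual stand-in, not Consul's API; the `Registry` class, its method names, and the addresses are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Instance:
    """One registered provider of a service."""
    address: str
    healthy: bool = True


class Registry:
    """In-memory stand-in for a service catalog (not Consul's API)."""

    def __init__(self) -> None:
        self._services: Dict[str, List[Instance]] = {}

    def register(self, service: str, address: str) -> None:
        self._services.setdefault(service, []).append(Instance(address))

    def report_health(self, service: str, address: str, healthy: bool) -> None:
        """Record the latest health check result for one instance."""
        for instance in self._services.get(service, []):
            if instance.address == address:
                instance.healthy = healthy

    def discover(self, service: str) -> List[str]:
        """Return only the addresses whose latest health check passed."""
        return [i.address for i in self._services.get(service, []) if i.healthy]


if __name__ == "__main__":
    registry = Registry()
    registry.register("mysql", "10.0.0.1:3306")
    registry.register("mysql", "10.0.0.2:3306")
    registry.report_health("mysql", "10.0.0.1:3306", healthy=False)
    print(registry.discover("mysql"))
```

Filtering on health at lookup time is what lets traffic be routed away from unhealthy hosts without callers tracking instance status themselves.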

Platform support

- VM-based workloads to be included in the service mesh

- Compliance requirements around certificate management

- A multi-cluster service mesh

- Extending existing Consul connected workloads

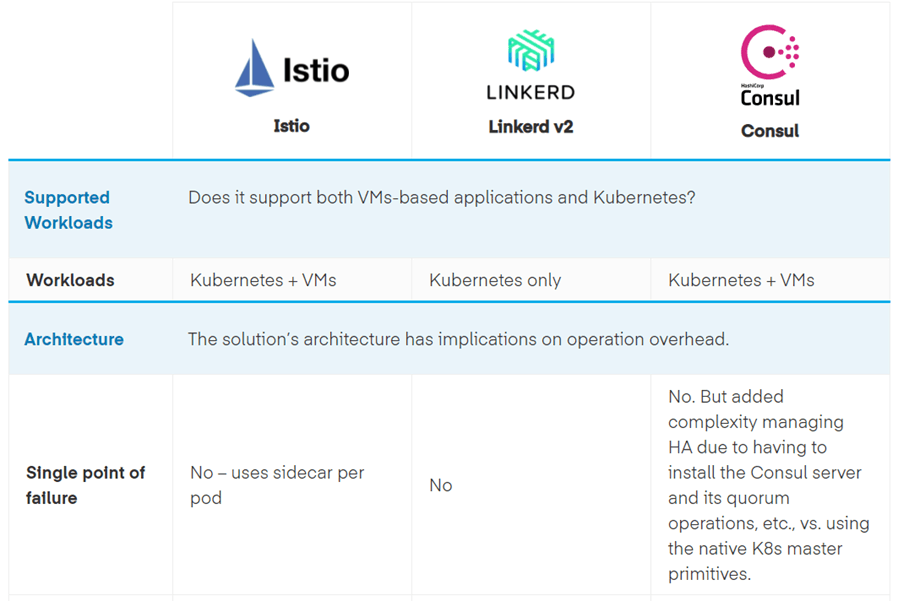

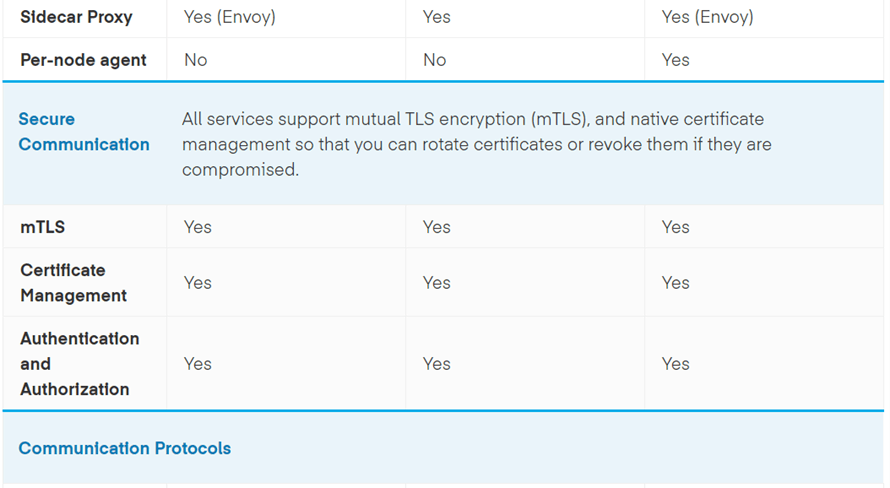

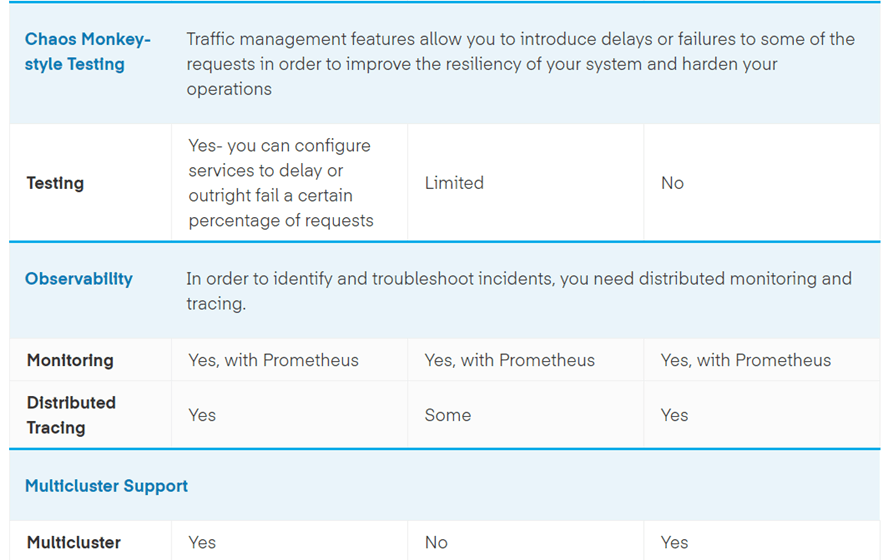

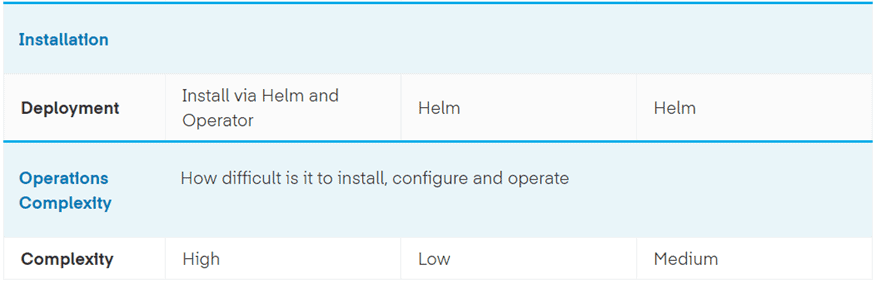

Comparison of Istio, Linkerd, and Consul Connect for Service Mesh